The team was building a service portal that would let users request on-demand application services on a pay-per-use model, and it needed expert input on the overall approach and architecture of the platform. By the time I was onboarded, the product team had already gone through two failed iterations.

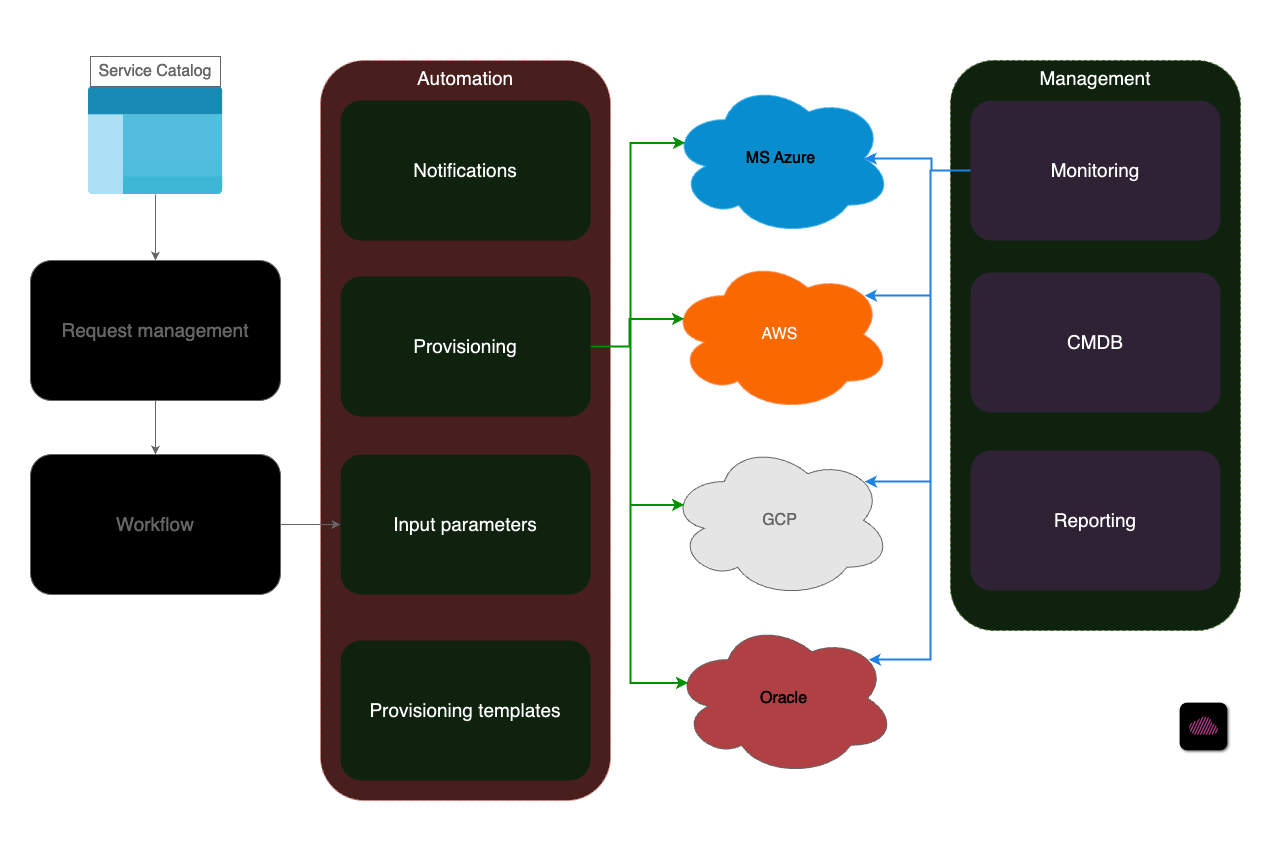

The goal was to move as much of the request catalog as possible to self-service, reducing the processing time and cost of each request. The existing request portal hosted many types of requests, ranging from password resets to server orders. The initiative had multiple facets: costing and pricing by cost center, automating logistical tasks, integrating with many third-party solutions, and handling licensing implications.
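Because the portal followed a pay-per-use model with costs attributed to cost centers, the basic charge-back arithmetic is worth making concrete. The Python sketch below multiplies metered usage hours by an hourly rate and totals the result per cost center. The rate card, offering names, and hourly metering are assumptions for illustration only; the actual pricing scheme is not described here.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical hourly rates per catalog offering (illustrative values only).
RATE_CARD = {"sql-database": 0.50, "vm-small": 0.10, "vm-large": 0.40}


@dataclass
class UsageRecord:
    cost_center: str
    offering: str
    hours: float  # metered usage; hourly metering is an assumption


def charge_back(records):
    """Return the pay-per-use charge (hours x hourly rate) per cost center."""
    totals = defaultdict(float)
    for record in records:
        rate = RATE_CARD[record.offering]  # KeyError flags an unpriced offering
        totals[record.cost_center] += record.hours * rate
    return {cc: round(total, 2) for cc, total in totals.items()}


usage = [
    UsageRecord("CC-100", "sql-database", 10),
    UsageRecord("CC-100", "vm-small", 24),
    UsageRecord("CC-200", "vm-large", 5),
]
print(charge_back(usage))  # {'CC-100': 7.4, 'CC-200': 2.0}
```

A real billing component would more likely use decimal arithmetic instead of floats to avoid rounding drift.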

Instead of modifying the existing legacy setup, management was keen on developing a new solution from the ground up, which in my opinion was a good approach given the scale of the overhaul they were aiming for. Leaving the legacy setup untouched also provided a fallback in case things didn't go as expected.

Previously

Owing to its legacy technology, the organization's internal portal was bulky in terms of code, resource utilization, and overall user experience. It was also inefficient, as all request fulfillment still depended on manual effort. It had served its purpose when it was built, since it introduced process-based execution, but newer technologies offered room for significant improvement.

It was an internally hosted ERP system (ticketing software) that simply tracked who requested what, which team the ticket was currently assigned to, the SLA for completion, and so on. Behind the scenes, a dedicated support team fulfilled these requests. Even a simple request for a SQL database was fulfilled manually on the target public cloud platform, and the credentials were shared over “confidential” email.

Opportunities

- While the system did offer benefits such as request tracking and cost center management, the manual effort was very time-consuming. With limited bandwidth (itself an operational cost), the support teams always relied on the full time allowed by the SLAs to fulfill their tasks. In busy seasons, SLA breaches were to be expected.

- There was no secure way to share credentials for the services being offered.

- Adding new features required support from multiple teams. The old code base had grown to the point where it made sense to rebuild the system from scratch.

- Reporting capabilities were limited, and management found it difficult to keep up with market trends. Generating custom reports again required manual effort to join data from multiple tables and run queries. This also created unnecessary redundancy, as each department kept its own copy of the data for reporting.

Impact

The project scope had already been defined before I was hired. The team had identified the catalog requests related to public cloud provisioning to be rolled out in the first go-live. This made sense, as the public cloud vendors offered extensive APIs and a strong product ecosystem, which made these requests easier to tackle first.

- Phase 1: After onboarding, I built a small PoC demonstrating how infrastructure as code (IaC) tools such as Terraform could be used to provision resources. With IaC, each offering could be defined as a template. These templates were reusable, with input variables capturing details such as name, description, and technical dimensions like memory, storage, and CPU. The team appreciated the approach and decided to go ahead with it.

- Phase 2: While planning the actual product, I helped the team automate provisioning from these templates through GitLab CI/CD pipelines. Given the security constraints, we decided to host the GitLab runners on-premises. Creating Terraform templates and onboarding them onto the pipelines, while managing the dependencies of each request item, was time-consuming because it required analyzing every parameter needed for provisioning. Without this analysis, a request would simply fail.

- Phase 3: To keep track of all resources provisioned and deprovisioned by users, we needed to gather and process all the related events. In the long run this called for a robust monitoring solution, but for the time being we relied on AWS CloudWatch. For VM-based requests, I also developed a client utility in Node.js to capture system logs for anomaly detection.

- Phase 4: Reporting was another part of the new solution. In this phase I delivered a dedicated microservice that supplied reporting data to the frontend, where it was rendered into various graphs. The data, covering cost, resource utilization, issues, and more, came from multiple sources. The microservice exposed an API that served real-time data in JSON format.

- Phase 5: The new catalog was released to production with a limited subset of the in-scope requests to gather real user feedback. This feedback helped shape our roadmap for the remaining services, and it also included positive reviews of the user experience. With the overall direction of the project set, I gradually phased out and supported the team in standby mode.
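The template approach from Phase 1, together with the parameter analysis that Phase 2 found essential, can be illustrated with a validation step that runs before Terraform does. In this Python sketch, each catalog offering lists the input variables its Terraform template is assumed to declare; a request is checked against that list and, if complete, rendered as the content of a `terraform.tfvars.json` file, which Terraform loads automatically. The offering names and variable sets are hypothetical.

```python
import json

# Hypothetical catalog: the input variables each offering's Terraform template
# declares (in the template itself these would be `variable` blocks).
TEMPLATE_INPUTS = {
    "vm": {"name", "description", "cpu", "memory_gb", "storage_gb"},
    "sql-database": {"name", "description", "storage_gb", "sku"},
}


def build_tfvars(offering: str, request: dict) -> str:
    """Validate a catalog request and render it as terraform.tfvars.json content."""
    expected = TEMPLATE_INPUTS.get(offering)
    if expected is None:
        raise ValueError(f"unknown offering: {offering}")
    missing = sorted(expected - set(request))
    if missing:
        # Rejecting the request here is cheaper than a failed pipeline run.
        raise ValueError(f"missing inputs for {offering}: {', '.join(missing)}")
    # Pass through only the variables the template declares, in a stable order.
    return json.dumps({key: request[key] for key in sorted(expected)}, indent=2)


print(build_tfvars("vm", {
    "name": "app-01", "description": "web tier",
    "cpu": 2, "memory_gb": 8, "storage_gb": 100,
}))
```

Checking inputs up front turns an opaque mid-pipeline failure into an immediate, specific error for the requester.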
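Phase 2 also involved managing the dependencies of each request item. One way to express this, sketched here in Python under an assumed dependency map (for example, a VM needing a subnet and a security group, both of which need a VPC), is a topological sort. The standard-library `graphlib.TopologicalSorter` (Python 3.9+) yields an order in which every item follows its prerequisites and raises `graphlib.CycleError` on circular dependencies. The item names and dependencies are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: catalog item -> items that must exist first.
DEPENDENCIES = {
    "vpc": set(),
    "subnet": {"vpc"},
    "security-group": {"vpc"},
    "vm": {"subnet", "security-group"},
    "sql-database": {"subnet", "security-group"},
}


def provisioning_order(requested: str, deps=DEPENDENCIES) -> list:
    """Return the requested item preceded by all of its transitive dependencies."""
    graph = {}
    pending = [requested]
    while pending:  # collect only the part of the graph this request needs
        item = pending.pop()
        if item in graph:
            continue
        graph[item] = set(deps.get(item, ()))
        pending.extend(graph[item])
    # static_order() yields each node only after all of its predecessors,
    # and raises graphlib.CycleError if the map contains a cycle.
    return list(TopologicalSorter(graph).static_order())


print(provisioning_order("vm"))
```

Items with no ordering constraint between them (here, the subnet and the security group) may appear in either order, which also means they could be provisioned in parallel.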
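To connect such templates to the GitLab pipelines from Phase 2, a portal backend could start a pipeline through GitLab's pipeline trigger API (`POST /api/v4/projects/:id/trigger/pipeline` with a trigger `token`, a `ref`, and optional `variables[...]` fields). The Python sketch below only builds the request. The host, project ID, token, and the convention of passing inputs as `TF_VAR_`-prefixed variables (which Terraform reads from the job's environment) are assumptions, not a description of the project's actual integration.

```python
import urllib.parse
import urllib.request


def build_trigger_request(gitlab_url, project_id, trigger_token, ref, tf_vars):
    """Build (but do not send) a GitLab pipeline-trigger API request.

    Each Terraform input becomes a pipeline variable prefixed with TF_VAR_,
    so Terraform can pick it up from the CI job's environment.
    """
    form = {"token": trigger_token, "ref": ref}
    for name, value in tf_vars.items():
        form[f"variables[TF_VAR_{name}]"] = str(value)
    url = f"{gitlab_url.rstrip('/')}/api/v4/projects/{project_id}/trigger/pipeline"
    body = urllib.parse.urlencode(form).encode("ascii")
    return urllib.request.Request(url, data=body, method="POST")


# Hypothetical host, project ID, and token; urlopen(request) would send it.
request = build_trigger_request(
    "https://gitlab.example.internal", 42, "TRIGGER_TOKEN", "main",
    {"name": "db-01", "storage_gb": 100},
)
print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes it easy to inspect or log what the portal is about to submit.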
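The anomaly detection in Phase 3 is not described in detail, and the original client was written in Node.js. As a rough illustration only, this Python sketch parses log lines in an assumed format, counts errors per minute, and flags minutes whose count lies more than `threshold` population standard deviations above the mean. This simple z-score rule is a stand-in chosen for the example, not the project's actual detection logic.

```python
import re
from collections import Counter
from statistics import mean, pstdev

# Assumed log line format: "<ISO timestamp> <host> <LEVEL> <message>".
LINE_RE = re.compile(r"^(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}):\d{2}\s+\S+\s+(\w+)\s")


def errors_per_minute(lines):
    """Count ERROR lines per minute; minutes with only other levels count as 0."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if not match:
            continue  # skip lines that don't follow the assumed format
        minute, level = match.groups()
        counts[minute] += 1 if level == "ERROR" else 0
    return counts


def anomalous_minutes(counts, threshold=3.0):
    """Flag minutes whose error count exceeds mean + threshold * std deviation."""
    if len(counts) < 2:
        return []
    values = list(counts.values())
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # perfectly uniform counts: nothing stands out
    return sorted(m for m, c in counts.items() if c > mu + threshold * sigma)


logs = [
    "2024-01-01T10:15:42 vm-01 ERROR disk quota exceeded",
    "2024-01-01T10:15:50 vm-01 INFO health check ok",
    "2024-01-01T10:16:01 vm-01 INFO health check ok",
]
print(dict(errors_per_minute(logs)))  # {'2024-01-01T10:15': 1, '2024-01-01T10:16': 0}
```

Counting quiet minutes as zero keeps the baseline honest; otherwise the mean would only reflect minutes that already had errors.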
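The reporting microservice from Phase 4 essentially merges data from several sources and serves it as JSON. The Python sketch below shows that shape using only the standard library: `build_report` joins hypothetical cost, utilization, and issue data by resource ID, and a minimal `http.server` handler returns the result from `GET /report`. The endpoint path, field names, and placeholder data are assumptions; the actual stack and API are not specified.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def build_report(costs, utilization, issues):
    """Merge per-resource data from three sources into one JSON-ready list."""
    resource_ids = sorted(set(costs) | set(utilization) | set(issues))
    return [
        {
            "resource_id": rid,
            "cost": costs.get(rid, 0.0),
            "cpu_utilization": utilization.get(rid),  # None if not reported
            "open_issues": issues.get(rid, 0),
        }
        for rid in resource_ids
    ]


def fetch_sources():
    # Placeholder data; a real service would query billing, monitoring, and ticketing.
    return {"vm-01": 12.5, "db-01": 40.0}, {"vm-01": 0.63}, {"db-01": 2}


class ReportHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/report":
            self.send_error(404)
            return
        body = json.dumps(build_report(*fetch_sources())).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Serve GET /report on localhost until interrupted."""
    HTTPServer(("127.0.0.1", port), ReportHandler).serve_forever()
```

Calling `serve()` and requesting `http://127.0.0.1:8080/report` returns the merged list. Merging in one service, rather than in each department's copy of the data, is what lets the frontend render every graph from a single source.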

Wins

The full scope of the project was huge, with thousands of request items to be analyzed and onboarded onto the new platform. By the end of the first year, the platform had proven its worth, but it still needed more time to mature. Nevertheless, the project team was set on the right path, which they continued to pursue afterwards.

- The efficiency of the ITSM system drastically improved across the organization for the request items modernized in this scope.

- User complaints decreased, since infrastructure requests were now provisioned within minutes.

- Management was able to free up support bandwidth and could now redirect it to other upgrades.

- The system proved much more secure on multiple levels: with proper credential management in place, known vulnerabilities such as sharing credentials over email were inherently addressed.

- Reporting improved. It did not yet eliminate the redundant copies of data, but the solution looked promising for when the full catalog revamp is completed.